Nomad Variables

Most Nomad workloads need access to configuration values or secrets. Nomad has a template block to provide such configuration to tasks, but prior to Nomad 1.4 it left the role of storing that configuration to external services such as HashiCorp Consul and HashiCorp Vault.

Nomad Variables provide the option to store configuration at file-like paths directly in Nomad's state store. You can access these variables directly from your task templates. The contents of these variables are encrypted and replicated between servers via Raft. Access to variables is controlled by ACL policies, and tasks have implicit ACL policies that allow them to access their own variables. You can create, read, update, or delete variables via the command line, the Nomad API, or the Nomad web UI.
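As a rough illustration of what a variable looks like, the sketch below is a variable specification file of the kind that `nomad var put` can read. The file name, the path, and the item keys and values are all made up for illustration.

```hcl
# spec.nv.hcl (hypothetical file name)

# Namespace and file-like path where the variable is stored.
namespace = "default"
path      = "nomad/jobs/example/cache/redis"

# Key/value items; these are the encrypted contents of the variable.
items {
  maxconns = "100"
  password = "replace-me"
}
```

Assuming a file like this, `nomad var put @spec.nv.hcl` should write the variable and `nomad var get nomad/jobs/example/cache/redis` should read it back, provided the token in use has the required capabilities on that path.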

Note that the Variables feature is intended for small pieces of configuration data needed by workloads. Because writing to the Nomad state store uses resources needed by Nomad, it is not well-suited for large or fast-changing data. For example, do not store batch job results as Variables; store them in an external database instead. Variables are also not intended to be a full replacement for HashiCorp Vault. Unlike Vault, Nomad stores the root encryption key on the servers. See Key Management for details.

ACL for Variables

Every Variable belongs to a specific Nomad namespace. ACL policies can restrict access to Variables within a namespace on a per-path basis, using a list of path blocks, located under namespace.variables. See the ACL policy specification docs for details about the syntax and structure of an ACL policy.

Path definitions may also include wildcard symbols, also called globs, allowing a single path policy definition to apply to a set of paths within that namespace. For example, the policy below allows full access to variables at all paths in the "dev" namespace that are prefixed with "project/" (including child paths) but only read access to paths prefixed with "system/". Note that the glob matches any sequence of characters, including the empty string. The literal prefix still has to match, so this policy grants read access to paths prefixed with "system/" but not to a path named "system" (without a trailing slash).

```hcl
namespace "dev" {
  policy       = "write"
  capabilities = ["alloc-node-exec"]

  variables {

    # full access to variables in all "project" paths
    path "project/*" {
      capabilities = ["write", "read", "destroy", "list"]
    }

    # read/list access within a "system/" path belonging to administrators
    path "system/*" {
      capabilities = ["read"]
    }
  }
}
```
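If a variable stored at the bare path "system" should be readable as well, one option is to list the exact path next to the glob. The fragment below is a minimal sketch of that idea; it assumes such a variable exists, which the policy above does not imply.

```hcl
namespace "dev" {
  variables {

    # exact match: covers only the variable named "system"
    path "system" {
      capabilities = ["read"]
    }

    # glob match: covers "system/" and everything below it
    path "system/*" {
      capabilities = ["read"]
    }
  }
}
```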

The available capabilities for Variables are as follows:

| Capability | Notes |
|---|---|
| write | Create or update Variables at this path. Includes the "list" capability but not the "read" or "destroy" capabilities. |
| read | Read the decrypted contents of Variables at this path. Also includes the "list" capability. |
| list | List the metadata but not contents of Variables at this path. |
| destroy | Delete Variables at this path. |
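Because "write" does not include "read", a policy can let a token set values it cannot read back. The fragment below is a sketch of that pattern; the namespace and both paths are hypothetical.

```hcl
namespace "dev" {
  variables {

    # can create, update, and list these variables, but not read or delete them
    path "project/credentials/*" {
      capabilities = ["write"]
    }

    # can see metadata only; the contents stay hidden
    path "system/*" {
      capabilities = ["list"]
    }
  }
}
```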

Task Access to Variables

In Nomad 1.4.0, tasks can only access Variables with the template block. The workload identity for each task grants it automatic read and list access to Variables found at Nomad-owned paths with the prefix nomad/jobs/, followed by the job ID, task group name, and task name. This is equivalent to the following policy:

```hcl
namespace "$namespace" {
  variables {

    path "nomad/jobs" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/$job_id" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/$job_id/$task_group" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/$job_id/$task_group/$task_name" {
      capabilities = ["read", "list"]
    }
  }
}
```

For example, a task named "redis", in a group named "cache", in a job named "example", will automatically have access to Variables as if it had the following policy:

```hcl
namespace "default" {
  variables {

    path "nomad/jobs" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/example" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/example/cache" {
      capabilities = ["read", "list"]
    }

    path "nomad/jobs/example/cache/redis" {
      capabilities = ["read", "list"]
    }
  }
}
```
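To make the implicit access concrete, the job fragment below sketches how the "redis" task could read its own variable from a template block using the `nomadVar` template function. The Docker image, the destination file name, and the `password` item key are assumptions made for illustration; the variable itself would have to be created separately.

```hcl
job "example" {
  group "cache" {
    task "redis" {
      driver = "docker"

      config {
        image = "redis:7" # assumed image, not taken from this page
      }

      template {
        # Render into the task's secrets directory and export the
        # resulting KEY=value lines as environment variables.
        destination = "${NOMAD_SECRETS_DIR}/redis.env"
        env         = true
        data        = <<EOT
{{ with nomadVar "nomad/jobs/example/cache/redis" }}
REDIS_PASSWORD={{ .password }}
{{ end }}
EOT
      }
    }
  }
}
```

No extra ACL policy is needed here, because the path falls under the task's automatic read and list access.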

You can provide access to additional variables by creating policies associated with the task's workload identity. For example, to give the task above access to all variables in the "shared" namespace, you can create the following policy file:

```hcl
namespace "shared" {
  variables {
    path "*" {
      capabilities = ["read"]
    }
  }
}
```

Then create the policy and associate it with the specific task:

```shell
nomad acl policy apply \
   -namespace default -job example -group cache -task redis \
   redis-policy ./policy.hcl
```

Priority of policies and automatic task access to variables is similar to the ACL policy namespace rules. The most specific rule for a path applies, so a rule for an exact path in a workload-attached policy overrides the automatic task access to variables, but a wildcard rule does not.

As an example, consider the job example in the namespace prod, with a group web and a task named httpd, with the following policy applied:

```hcl
namespace "*" {
  variables {

    path "nomad/jobs" {
      capabilities = ["list"]
    }

    path "nomad/jobs/*" {
      capabilities = ["deny"]
    }
  }
}
```

The task will have read/list access to its own variables nomad/jobs/example, nomad/jobs/example/web, and nomad/jobs/example/web/httpd in the namespace prod, because those are more specific than the wildcard rule that denies access. The task will have list access to nomad/jobs in any namespace, because that path is more specific than the automatic task access to the nomad/jobs variable. And the task will not have access to nomad/jobs/example (or below) in namespaces other than prod, because the automatic access rule does not apply.

See Workload Associated ACL Policies for more details.

Locks

Nomad provides the ability to block a variable from being updated for a period of time by setting a lock on it. Once a variable is locked, it can be read by everyone, but it can only be updated by the lock holder.

The locks are designed to provide granular locking and are heavily inspired by The Chubby Lock Service for Loosely-Coupled Distributed Systems.

A lock is composed of an ID, a TTL, and a lock delay. The ID is generated by the Nomad server and must be provided on every request to modify the variable's items or the lock itself. The TTL defines how long the lock is held; if the lock needs to stay in place for longer, it can be renewed as many times as needed.

Once the lock is no longer needed, it must be released. If the TTL expires without a renew or release call, the variable remains locked for at least the lock delay duration. This avoids a possible split-brain situation in which there are two holders at the same time.

Leader election backed by Nomad Variable Locks

For some applications, like HDFS or the Nomad Autoscaler, it is necessary to have multiple instances running to ensure redundancy in case of a failure, but only one of them may be active at a time as a leader.

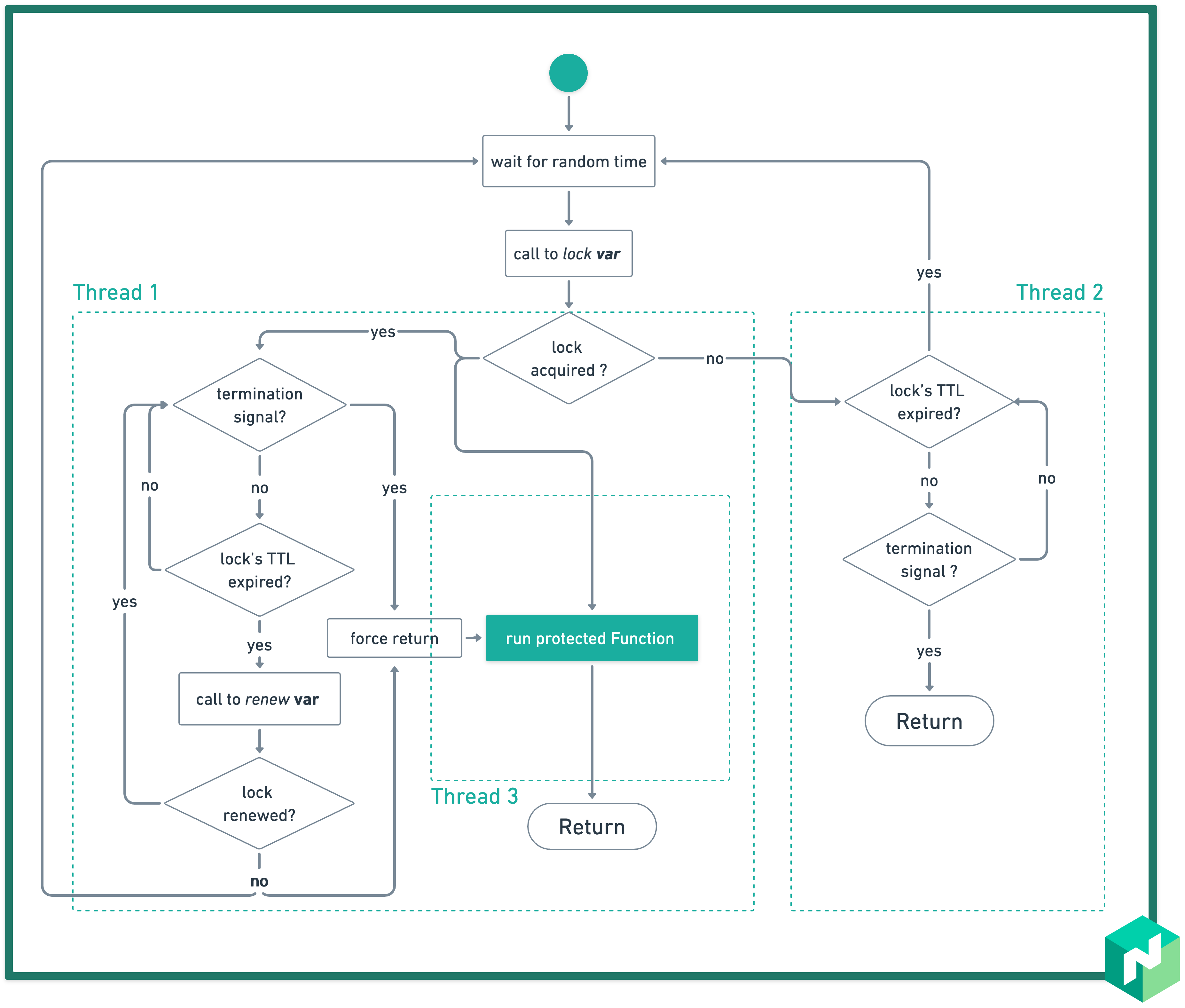

As part of the Go package, Nomad offers a helper that takes one variable and uses a lock on it as a synchronization mechanism, so that multiple instances can be deployed while only one is active at any given time. It uses the following algorithm:

As soon as any instance starts, it tries to lock the sync variable. If it succeeds, it continues to execute while a secondary thread keeps track of the lock and renews it when necessary. If the renewal fails, the main process is forced to return, and the instance goes into standby until it can attempt to acquire the lock on the sync variable again.

Either threads 1 and 3 or thread 2 are running at any given time, because every instance is either executing as normal while renewing the lock or waiting for a chance to acquire it and run.

When the main process, or protected function, returns, the helper releases the lock, allowing a second instance to start running.

To see it in practice, look at the nomad var lock command implementation or the Nomad Autoscaler High Availability implementation.